
Artificial intelligence (AI) has helped shape the conduct of Israeli military operations in Palestine since the crisis of April–May 2021. Sparked by tensions in East Jerusalem, that crisis escalated into an 11-day conflict between Israel and Hamas before an Egyptian-brokered ceasefire ended hostilities. In this context, during the Israeli operation “Guardian of the Walls”, a senior officer in the intelligence corps of the Israel Defense Forces (IDF) declared that “artificial intelligence was a key component and power multiplier in fighting the enemy”. The Israeli army indicated that it had struck “over 1,500 terror targets” during the first month by using the Habsora system (“The Gospel” in English).

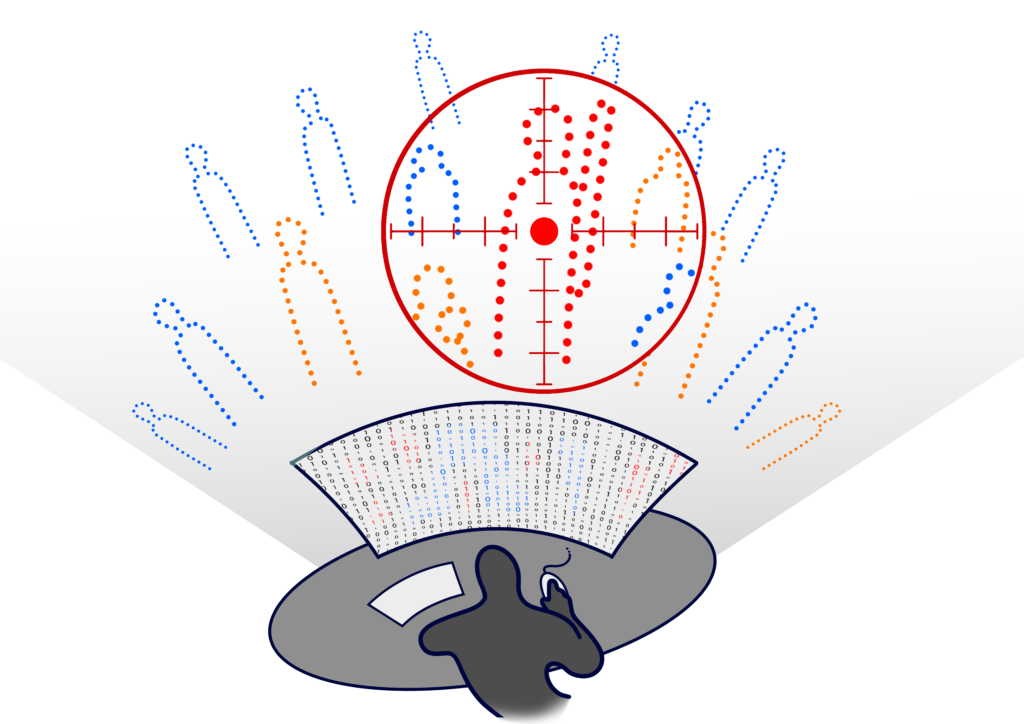

By comparison, in the first thirty-five days of the conflict in Gaza, launched after the terrorist attacks perpetrated by Hamas and Palestinian Islamic Jihad (PIJ) on 7 October 2023, the Israeli authorities announced that they had hit more than 15,000 targets. This substantial increase in bombing stems from the particular context of Israel’s massive retaliation. With or without AI, the destruction might have been the same; without a counterfactual, it is impossible to say. What is clear, however, is that the strikes on the Gaza Strip have been characterized by an increased use of AI to determine targets. The Habsora system has revolutionized the targeting process by generating up to 100 targets per day, whereas human analysts used to identify around 50 per year. In April 2024, the independent Palestinian-Israeli investigative outlet +972 Magazine revealed that two additional AI-based systems, Lavender and Where’s Daddy, also played a key role in automating target selection and the geolocation of targets.


The use of AI in Gaza has been heavily criticized by the press, non-governmental organizations (NGOs) and United Nations (UN) experts. In a statement issued by the Israeli army to legitimize the use of these technologies, a senior intelligence official stated that the system allowed the identification of targets for precision strikes “causing great damage to the enemy and minimal damage to non-combatants”. However, these promises of precision contrast with reality, as the use of AI has resulted in significant civilian casualties. While the exact toll remains uncertain, Gaza authorities reported over 45,000 deaths as of 16 December 2024.

The automation of decision-making called into question

How can the use of AI for targeting still be presented as an operational advantage, despite the massive destruction observed, the number of civilian casualties, and the shows of force staged by Hamas during hostage and prisoner exchanges, and even though it raises major ethical concerns linked to the automation of decision-making?

To answer this question, this text proceeds in three steps. First, it presents the operating principles of Israel’s three AI systems and the abuses associated with them. Second, it discusses the ethical issues raised by the lack of human control and the conditioning of the decision-making process. Third, it addresses the need to distinguish between “meaningful human control” and “nominal human input”, as highlighted in the communication from the State of Palestine to the UN.
